Feature image via MIT Technology Review.

Gabriella Skoff

For the first time, the environmental impact of training AI for natural language processing (NLP) has been quantified, and the results are jarring. A new paper calculates that the lifecycle of training an NLP deep learning algorithm produces the equivalent of 626,000 pounds of carbon dioxide, nearly five times the lifetime emissions of the average American car, including its manufacture. The paper brings new life to a parallel aptly drawn by Karen Hao in her article on the research for the MIT Technology Review: the comparison of data to oil now extends beyond its value in today’s society to encompass the heavy costs it imposes on the natural environment as an industry practice. The researchers found that the highest-scoring deep learning models for NLP tasks were also the most “computationally-hungry”: training them demands far more energy because of their voracious appetite for data, the essential resource needed to create better AI. Data crunching is an area in which quantum computing is expected to lend a critical advantage to deep learning. Could it also help to curb AI’s carbon footprint?

The environmental impact of data is not often discussed, due in part to its lack of visibility. Fossil fuel plants can dominate the skyline, and the plumes of smoke billowing above them have come to symbolise the problem for many. Documentaries have taught many of us that even cows have a surprisingly large impact on climate change through the methane they produce. Data centres, however, are far less conspicuous polluters, though their impact is substantial. The global ICT system is estimated to require about 1,500 terawatt-hours of electricity per year. To put that into context, that’s about 10% of the world’s total electricity generation. Given that the majority of the world’s energy is still produced from fossil fuels, the biggest contributor to climate change, this represents a serious challenge that few seem to be talking about.
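As a rough sanity check, the two figures quoted above can be combined to see what they imply. The sketch below uses only the article's numbers (1,500 TWh per year and a share of about 10%); the variable names are illustrative, and the results are back-of-the-envelope values, not independent estimates.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years

ict_twh_per_year = 1_500         # ICT electricity use quoted in the article
ict_share_of_generation = 0.10   # "about 10%" of world generation, as quoted

# World electricity generation implied by the two quoted figures.
implied_world_twh = ict_twh_per_year / ict_share_of_generation

# Average continuous power draw: convert TWh to GWh, then divide by hours.
ict_average_gw = ict_twh_per_year * 1_000 / HOURS_PER_YEAR

print(f"Implied world generation: {implied_world_twh:,.0f} TWh/year")
print(f"Average ICT power draw:   {ict_average_gw:,.0f} GW")
```

Taken at face value, the quoted figures imply world generation of about 15,000 TWh per year and an average ICT draw of roughly 171 GW around the clock.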

As computers become more powerful, their power usage increases too. Supercomputers are known to be incredibly gluttonous when it comes to energy consumption. In 2013, China’s Tianhe-2, a supercomputer with a performance of 33.9 petaflops, was one of the most energy-intensive computers in the world, using 17.8 megawatts of power. That is about as much power as is needed to supply an entire town of around 36,000 people. While supercomputers today are used for anything from climate modelling to designing new nuclear weapons, many of the next generation of supercomputers are being tailor-made to train AI.
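The Tianhe-2 comparison can be unpacked with some simple arithmetic. This is a minimal sketch using only the figures quoted above (17.8 megawatts, 33.9 petaflops, a town of 36,000 people); the annual figure assumes the machine runs at full power all year, which is an assumption made here for illustration and not a claim from the article.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years

tianhe2_power_mw = 17.8    # power draw quoted in the article
tianhe2_petaflops = 33.9   # performance quoted in the article
town_population = 36_000   # town size quoted in the article

# Power per resident implied by the town comparison, in watts.
watts_per_person = tianhe2_power_mw * 1e6 / town_population

# Energy per year in GWh, ASSUMING continuous operation at full power.
annual_gwh = tianhe2_power_mw * HOURS_PER_YEAR / 1_000

# Energy efficiency: floating-point operations per second per watt, in GFLOPS/W.
gflops_per_watt = (tianhe2_petaflops * 1e15) / (tianhe2_power_mw * 1e6) / 1e9

print(f"Implied power per resident: {watts_per_person:.0f} W")
print(f"Energy per year (full load): {annual_gwh:.1f} GWh")
print(f"Efficiency: {gflops_per_watt:.2f} GFLOPS per watt")
```

The town comparison works out to a little under 500 watts per resident, and the machine delivers roughly 1.9 GFLOPS for every watt it draws.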

Summit, the supercomputer at the U.S. Department of Energy’s Oak Ridge National Laboratory (ORNL), is the first supercomputer to be specifically built for AI applications. Summit is capable of 200 petaflops at peak performance, establishing the U.S.’s ascent to top player in the supercomputing world, a place only recently taken from China. The U.S. aims to reach the next milestone, building a supercomputer capable of an exaflop (a billion billion calculations per second), by 2023. The numbers speak for themselves. A future reliance on these supercomputers to train AI will result in far greater energy usage, by a factor that in today’s stubbornly fossil-fuel-reliant society would have a severely negative impact on climate change. While some are looking toward alternative power sources for training AI, perhaps quantum computers, which could in principle require far less power than supercomputers, could support a more energy-efficient transition for AI training.

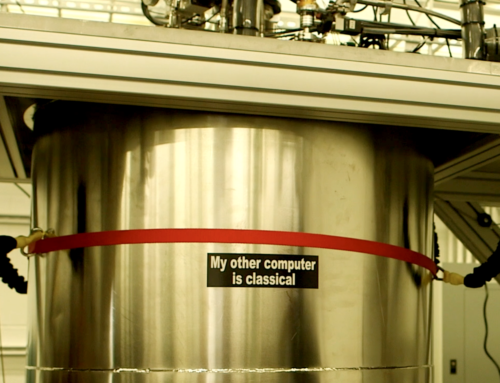

Currently, quantum computers still use more power than classical computers because of their extreme cooling requirements. Most quantum computers rely on cryogenic refrigerators to operate, and these are immensely energy-inefficient. As such, the vast majority of a quantum computer’s energy usage goes directly to the cooling infrastructure required to operate it. However, this refrigeration brings a critical advantage in quantum computing: operating at temperatures near absolute zero allows the quantum processor to be superconducting. A superconducting processor can process information using almost no power and generating almost no heat, resulting in an energy requirement that is only a fraction of that of a classical computer. According to ORNL, “quantum computers could reduce energy usage by more than 20 orders of magnitude to conventional [super]computers”.

Quantum computing is expected to lend an essential boost to AI and could be used for more effective training in deep learning tasks such as NLP in the future. While the environmental operating costs of quantum computers may be high due to their cooling requirements, novel cooling techniques are being explored, which could one day present solutions to quantum’s power problem. As the AI industry continues to grow exponentially, it is imperative that its environmental impact be considered in order to direct a more responsible development of the sector. Even with the high level of operational energy usage factored in, quantum computers present a distinct energy-efficiency advantage over supercomputers and could be used to help curb the carbon footprint of training tomorrow’s AI.
