Alexander Vipond

British AI firm DeepMind has a penchant for playing games. From Atari to Go, DeepMind researchers have been training AI agents to compete against themselves and beat human competitors.

The latest challenge was a specially modified version of a gaming classic, Quake III. A multiplayer first-person shooter video game from the turn of the millennium, Quake III sees teams of aliens and humans vie for supremacy. DeepMind has taken this premise to the next level: now it is AI agents versus humans.

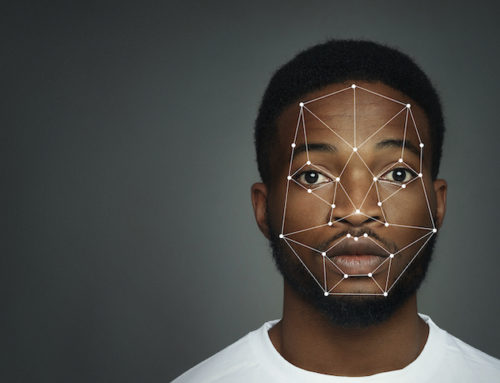

Through reinforcement learning, AI agents were tasked with learning to capture the enemy flag from their opponents’ base across a series of procedurally generated environments, so that no two games were the same. The aim was to see whether AI agents could learn strategies and cooperate in complex three-dimensional environments with imperfect information, that is, without knowing where the other team’s players are. To do this, DeepMind created a new type of agent dubbed FTW (For the Win) that outperformed agents trained with traditional methods and exceeded human-level play.

FTW agents learnt a higher standard of gameplay thanks to three ingredients: a whole population of agents trained together, an internal representation that lets each agent reason at two timescales (fast and slow) and so act more consistently, and a two-tiered reward scheme that rewards useful actions during a game rather than only the final win or loss. Training a population in parallel not only proved more efficient but also revealed a diversity of approaches, as each agent optimised its own unique internal reward signal.
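To make the two-tiered idea more concrete, here is a minimal sketch in the spirit of population-based training. It is not DeepMind’s implementation: the event names, the weight values, the 20% perturbation and the "bottom quarter copies the top quarter" rule are all illustrative assumptions. The inner tier is `internal_reward`, a dense per-event reward each agent would learn from during play; the outer tier ranks agents by a fitness such as win rate and lets weak agents copy and mutate the reward weights of strong ones.

```python
import random
from dataclasses import dataclass

# Hypothetical game events; names are illustrative, not taken from DeepMind's code.
EVENTS = ["captured_flag", "picked_up_flag", "tagged_opponent", "was_tagged"]


@dataclass
class Agent:
    weights: dict          # inner tier: how much this agent values each game event
    fitness: float = 0.0   # outer tier: e.g. win rate measured over evaluation games


def internal_reward(agent, events):
    """Dense reward for a list of game events, shaped by the agent's own weights."""
    return sum(agent.weights.get(e, 0.0) for e in events)


def exploit_and_explore(population, rng, perturb=0.2):
    """One outer-tier step: the weakest quarter copies a top-quarter agent's
    reward weights (exploit), then scales each weight up or down (explore)."""
    ranked = sorted(population, key=lambda a: a.fitness, reverse=True)
    cut = max(1, len(ranked) // 4)
    top, bottom = ranked[:cut], ranked[-cut:]
    for loser in bottom:
        winner = rng.choice(top)
        loser.weights = {
            e: w * rng.choice([1 - perturb, 1 + perturb])
            for e, w in winner.weights.items()
        }
    return population
```

In a full system each agent would also be trained by reinforcement learning on its internal reward between these steps; the sketch leaves that out so that only the two reward tiers are visible.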

Credit: DeepMind

After an average of 450,000 training games, the FTW agents were placed in a tournament with forty human players, whom they could play with as teammates or against as adversaries. To ensure a fair fight, researchers engineered balance into the game to offset the AI agents’ reaction-time advantage, since the human eye cannot process frames as quickly as the game’s 60 frames per second.

On average, FTW agents achieved a higher win rate than even strongly skilled human players. The agents learnt how to follow teammates, defend their base and “camp” at the enemy base, picking off players as they spawned. They achieved the greatest collaboration in teams of four, although they struggled to maintain this as the number of players grew.

Credit: DeepMind

As usual, it’s not the game itself that represents progress here but the growing capacity of AI agents to develop cooperative behaviour. DeepMind has demonstrated that AI agents can work in small teams, alongside humans and other AI agents, towards a shared goal. The better AI agents can work together to manage uncertain environments and imperfect knowledge, the better they are likely to perform when faced with the chaos of the real world.
