Image Credit: Centre for Translational Data Science, University of Sydney.

Alexander Vipond

At the recent Ethics of Data Science conference, hosted by the Centre for Translational Data Science at the University of Sydney, an interdisciplinary panoply of software engineers, machine learning experts, clinicians and lawyers came together to discuss how artificial intelligence and big data are changing society.

What quickly became clear was that the field is advancing so fast that participants were grappling not only with the recent and future impacts on their industries but also with the sheer pace of technological change itself.

Some presenters argued that the recent talk of ethical AI principles from big tech companies was merely a form of ethics washing, a strategic ploy to delay and weaken regulation on privacy, dangerous content and data rights. Other speakers opined that ethics were simply not enough: that in order for them to be of real value to society we need to move beyond ethical principles to enforceable laws, changes to organisational cultures and clear regulations.

Many of the legal experts in attendance outlined the knowledge gap between technologists and other parts of society, citing the need to properly educate judges, policymakers and politicians on AI so they can make informed decisions. These arguments highlighted the Australian Government’s recent push to strengthen penalties for companies that breach privacy regulations, accompanied by an increase in funding for the Office of the Australian Information Commissioner to pursue data breaches. The recent acknowledgement by Attorney-General Christian Porter, as well as by panelists at the conference, that Australian data laws are insufficient to protect citizens in the current environment led to many proposals for change.

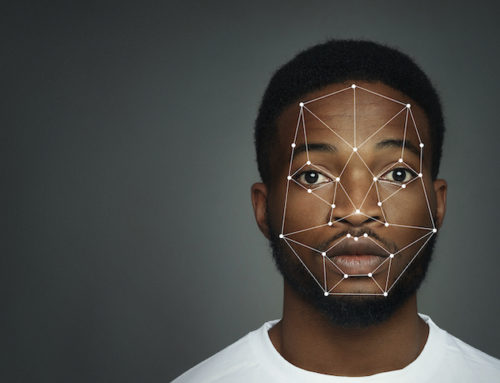

These included Australian states joining the European Union’s General Data Protection Regulation and adopting international human rights law as a framework for wider regulation of emerging technologies. There was also a concerted focus on how to protect marginalised communities most at risk of exploitation. For example, many presenters noted algorithms that reinforced racism in US prison sentencing or sexism in recruitment practices.

On this front, many of the technical presentations offered methods to ensure greater fairness in the design of machine learning algorithms and outlined the important technical limitations and trade-offs that need to be considered when companies want to harness the power of artificial intelligence. Discussions of the difference between ethical principles and the formal mathematical models used to embed them in technology, the types of questions machine learning can and can’t answer, and how to reduce bias in datasets showed the interdisciplinary audience the improvements that could be made with creative thinking and consideration of a broader worldview.

This gave rise to questions of how to address inclusiveness in the industry and the geopolitical spectre of business and state-based competition. While that competition has led to huge investment, it has also prompted a new technological race, the consequences of which must be balanced so that positive breakthroughs for society can be maximised and risks can be addressed. The foundations of clear laws and a national strategy on AI in Australia (with funding to support implementation) are yet to be laid. The conference gave participants a window into what organisational coordination and creative solutions could be embraced with strong leadership from government and industry.

The author would like to thank Dr Roman Marchant, Professor Sally Cripps, Professor Nick Enfield and the advisory board for organising the conference.
