Susannah Lai

The second day of this year’s Q Symposium greeted attendees with a rousing 9 am lecture on Microphysics, a subject focusing heavily on the more technical aspects of quantum physics and aimed particularly at leading-edge technologies. The panel was chaired by Professor Steve Bartlett of Sydney University’s Nano Institute, with Dr Andrea Blanco-Redondo, Professor Gavin Brennen and Dr Benjamin Brown. All the speakers focused primarily on introducing their respective avenues of research, with some evaluation of where developments in the area could go and what successes there could contribute to quantum as a field or to technology in general.

Professor Bartlett started by making clear the position of scientists in general with regard to working with policy makers. He argued that they are open to dialogue and are eager to try to explain the complexities of their positions and figure out ways to create ethical policies to ensure that their research develops in a safe manner, without damaging the progress of the research itself.

Addressing the topic of the ‘quantum race’, he said that if it is a ‘race’ then it is a long one, as the field is still very much in its infancy despite the sweeping and dramatic claims about what the technology will be able to do. He mused that the scientific community is not even sure whether there is a ‘finish line’, let alone what it would look like, except that it is probably not Skynet, and it certainly won’t be Skynet without any warning.

He then went on to explain that from the perspective of researchers in the field, this ‘race’ is more of a ‘fun run’ than anything else. It isn’t thought of in nationalistic terms and, in his opinion, no matter who wins, all the participants will be happy for each other and congratulate each other. Scientists and researchers are, Professor Bartlett asserted, unapologetic ‘techno-optimists’ and will always approach matters with that attitude. Further, he argued that no one in the scientific community is thinking solely of ‘achieving quantum supremacy’ for their country.

The first to speak was Dr Andrea Blanco-Redondo of Sydney University’s CUDOS research group, which focuses on photonics. Dr Blanco-Redondo’s particular area of study is creating ‘fragile quantum states’ using photonics. The concept behind this is that the technology could be used to encode photon qubits, with binary values assigned to the degrees of freedom that the photon possesses, that is, the direction of the photon’s wave. The quantum element of superposition here expands the number of states and assignable values to six. The difficulty in employing this technology comes from forcing the photons to interact in order to perform computations.
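As a rough illustration of how a photon's wave direction can carry a qubit, the sketch below assumes the six states in question are the six standard polarization states (horizontal, vertical, diagonal, anti-diagonal, and the two circular polarizations). That reading is an assumption made here, not something stated in the talk, and the names `POLARIZATION_STATES` and `overlap_probability` are hypothetical.

```python
import math

S = 1 / math.sqrt(2)

# Each state is a pair of complex amplitudes over the binary basis (H = 0, V = 1).
# Assumption: the "six states" are the six standard polarization states.
# The sign convention for R and L varies between textbooks.
POLARIZATION_STATES = {
    "H": (complex(1), complex(0)),      # horizontal, encodes binary 0
    "V": (complex(0), complex(1)),      # vertical, encodes binary 1
    "D": (complex(S), complex(S)),      # diagonal: equal superposition of H and V
    "A": (complex(S), complex(-S)),     # anti-diagonal
    "R": (complex(S), complex(0, -S)),  # right-circular
    "L": (complex(S), complex(0, S)),   # left-circular
}

def overlap_probability(a, b):
    """Probability that a photon prepared in state a is detected in state b."""
    inner = sum(x.conjugate() * y for x, y in zip(a, b))
    return abs(inner) ** 2

h = POLARIZATION_STATES["H"]
v = POLARIZATION_STATES["V"]
d = POLARIZATION_STATES["D"]
p_h_as_v = overlap_probability(h, v)  # orthogonal states are never confused
p_d_as_h = overlap_probability(d, h)  # a superposition gives a 50/50 outcome
```

The two basis states play the role of an ordinary bit, while the other four are superpositions of them; measuring a superposition in the H/V basis yields either outcome with equal probability.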

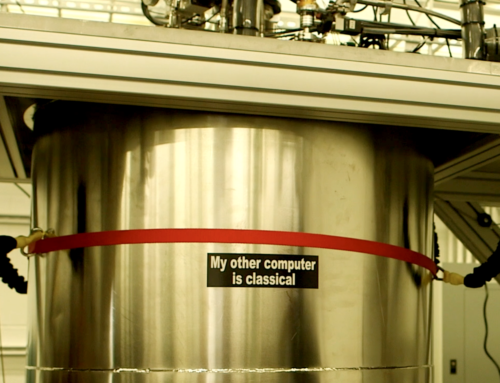

However, she has high hopes for photonics as an avenue for quantum computing, as photons travel fast, do not interact with the environment or ‘decohere’, and operate at room temperature on a chip (an important consideration if quantum computers are to be distributable at any practical level, since the currently available qubits are held in large facilities with most of the space dedicated to cooling them). Still, the problems Dr Blanco-Redondo mentioned remain: photons are extremely sensitive to material faults, and forcing them to interact is difficult.

Dr Blanco-Redondo then explained that earlier in the development of quantum technology, investment was largely not focused on photonics. Companies like Microsoft and Google invested in other avenues, but more recent investors have been backing photonics. In terms of the state of development in the industry, she said, quantum machines of any kind are still far off, even relatively simple, specialized machines such as a quantum device that can break encryption. Fundamentally, Dr Blanco-Redondo explained, scientists are mostly just trying to understand the universe around them better.

Professor Gavin Brennen of Macquarie University’s CQuIC spoke next. His focus was on the discovery of anyons, whether by creating them or simply finding them in the right context. This area is also the current focus of Microsoft’s research into creating qubits. Theoretically, anyons exist in two-dimensional areas where one particle is wound around another. This changes the nature of the path: it cannot be warped to exclude the other particle. When a ‘periodic table’ of anyons was theorized, there appeared to be a possibility of anyons that are powerful enough to do quantum computing, so it would be possible to create cells of anyons and perform computations by moving specific anyons between cells and observing the results. Currently, work is being done to create anyon arrays at the end of ‘comb’ fibres.

To understand the potential impact of this research on society, a ‘quantum risk analysis’ was performed, according to Professor Brennen. This involved speculating on the development of quantum chips, their direction and possible computing power, as well as the development of sufficient error correction in the resulting computations to make calculations accurate. Developments in noise reduction put the rate of accuracy in qubits at 77.1%, as opposed to the near 100% accuracy of conventional computing. More recent developments purport to bring that accuracy up via ‘zero-noise extrapolation’, but even then, there are non-insignificant error rates involved. Understanding what that would mean for the possible breaking of RSA 256 (the current industry standard for encryption) was also part of the risk assessment. The development time frame estimated for this was perhaps around 2035 to 2060.
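The general idea behind zero-noise extrapolation can be sketched as follows: run the same computation at several deliberately amplified noise levels, fit a curve through the results, and read off the value the curve predicts at zero noise. The example below is a minimal sketch of that idea only. The exponential noise model in `noisy_expectation`, its `decay` parameter, and the chosen scale factors are all assumptions for illustration, not figures from the talk.

```python
import math

def richardson_extrapolate(scales, values):
    """Fit a polynomial through (scale, value) points and evaluate it at scale 0."""
    estimate = 0.0
    for i, (x_i, y_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, x_j in enumerate(scales):
            if j != i:
                # Lagrange basis polynomial for point i, evaluated at 0
                weight *= (0.0 - x_j) / (x_i - x_j)
        estimate += weight * y_i
    return estimate

def noisy_expectation(scale, ideal=1.0, decay=0.1):
    """Hypothetical device: the measured value decays as the noise is scaled up."""
    return ideal * math.exp(-decay * scale)

scales = [1.0, 2.0, 3.0]  # 1.0 is the hardware's native noise level
values = [noisy_expectation(s) for s in scales]
zne_estimate = richardson_extrapolate(scales, values)

raw_error = abs(values[0] - 1.0)        # error of the unmitigated result
mitigated_error = abs(zne_estimate - 1.0)  # smaller, but not zero
```

Under this toy model the extrapolated estimate lands much closer to the ideal value than the raw measurement does, but a residual error remains because the fitted polynomial only approximates the true noise behaviour.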

Fittingly, the third speaker, Dr Benjamin Brown, also of the University of Sydney, is working in the field of quantum error correction. As with other areas of development in quantum computing, this type of research is being done at multiple institutes, pursuing various avenues. Echoing Professor Bartlett’s claim, Dr Brown said that it is more of a friendly quantum ‘race’, characterized by international collaboration between teams in Europe and Silicon Valley, mostly within Google and Microsoft.

The main motive behind the work being done is the fact that qubits are inevitably going to go wrong, and thus require designs that allow sufficient information to be retained in order to do worthwhile computations. Fundamentally, Dr Brown explained, information is physical; it is stored in physical mediums, whether they be bits or qubits or some other type of switch. On a conventional hard drive, error correction largely takes care of itself internally: the use of magnetic fields means that even if an atom flips to the wrong direction, the correctly aligned atoms surrounding it will force it to flip back. For qubits, this doesn’t happen in three dimensions but requires four dimensions to be effective, and there is a distinct lack of four-dimensional space available.
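The hard drive behaviour described here resembles a majority rule: a lone flipped atom is outvoted by its neighbours. A classical repetition code captures the same principle and is sketched below. This is only an analogy for the classical case, not Dr Brown’s scheme; quantum states cannot simply be copied, so quantum error correction needs different constructions. The function names are hypothetical.

```python
def encode(bit, copies=5):
    """Store one logical bit as several identical physical copies."""
    return [bit] * copies

def majority_decode(codeword):
    """Recover the logical bit by majority vote (use an odd number of copies)."""
    return 1 if sum(codeword) * 2 > len(codeword) else 0

def repair(codeword):
    """Reset every copy to the majority value, like neighbours realigning a flipped atom."""
    return encode(majority_decode(codeword), len(codeword))

stored = encode(1)
stored[2] ^= 1             # a single copy flips by accident
repaired = repair(stored)  # the majority pulls it back into line
```

The stored bit survives as long as fewer than half of the copies flip before the next repair.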

This means that error correction must be external, forcibly reviewing the data stored. This is the area where Dr Brown is working, trying to figure out a system wherein even if the rest of the qubits fail, a core of preserved, correct data is able to maintain integrity.

Following this, the floor was opened to questions, which mostly concerned the possible implications of the developments discussed for society and current technology, and the timeline for developing the technology compared with that for developing relevant policy. The first question related to the current use of RSA 256, and what would happen when quantum computing broke it. The reply was that hopefully important secrets and transactions would have been moved to more secure methods of encryption by then. If important information is still being encrypted with RSA 256 after it is broken, it would be a major issue. The next question concerned the typical lag of policy behind technological development (about 20 years), and whether there would be sufficient frameworks in place when the technology really starts to develop and, presumably, enters a boom period. In response, it was mentioned that the US has already started trying to develop relevant frameworks and that good work is being done in that area.

Other questions followed. One concerned the claims of collaboration between teams of scientists, particularly across borders, as the development of potentially militarized technology does not normally lend itself to collaboration. As such, it was somewhat expected that researchers would ‘disappear’ when their technology approached possible military applications. The answer was that in most fields, research is still highly collaborative and there has been little to no ‘vanishing’, except in the area of quantum key distribution, where there is a definite trend of this occurring with increasing frequency as the field develops to the point of real-world application.

The last question asked about the possibility of ‘group think’ occurring among researchers as they assess the likelihood of negative impacts, especially since there is a temptation to think optimistically on the subject. Dr Blanco-Redondo and Dr Brown commented that their areas were so small and specific that they had not really considered this, as it was their impression that there would be little overall impact from developments in their fields. However, Professor Brennen did express concern, specifically about whether scientists and technology-based researchers who perform risk analysis were overreaching in their expertise, but hoped that further dialogue between the technical side and the policy side would make it work out.
