In 1989, Sir Roger Penrose argued artificial intelligence was beyond human inventive capacity. For thirty years he’s been right. As the prospect of quantum computing dawns, is there reason to believe Penrose might finally be proven wrong?

In early October, it was announced that Sir Roger Penrose would be a joint recipient of the 2020 Nobel Prize in Physics for his discovery that “black hole formation is a robust prediction of the general theory of relativity.” Although Penrose’s Nobel address on 10th December will no doubt be fascinating, let’s delve further back into the mists of time and examine one of his bold predictions: a prediction that, so far, has received little coverage from a media lavishing well-deserved praise on this soon-to-be Nobel laureate.

Thirty years ago, Penrose dedicated an entire book to the argument that Artificial Intelligence (AI) was a physical and technological impossibility. He claimed we have neither the technological capacity nor the understanding of human consciousness needed to make it a reality. Despite this cautionary tale, the planet’s three largest economic blocs (the United States, China, and the European Union), as well as the world’s largest corporations, are separately competing, investing, and striving towards this very endeavour. On the one side, we have arguably the world’s most brilliant mind, and on the other, a planet’s worth of political and economic power. The former says AI can’t and won’t happen; the latter are trying to make sure it does. It is Roger Penrose versus the world. For 30 years Penrose has been right. The question is, for how long will he retain the upper hand?

Penrose’s argument boils down to a belief that: (a) we don’t understand physics well enough to use it to describe our brains; (b) we don’t understand the mind well enough to create a framework capable of accommodating human consciousness; and (c) since our minds do not operate ‘computationally’ and are non-algorithmic, our intelligence, therefore, can’t be recreated by computers. In short: artificial intelligence is beyond our wit.

Since Penrose wrote The Emperor’s New Mind, however, the game has changed. There’s a new kid on the block and this technological upstart presents a genuine challenge to all three of Penrose’s assumptions. The great disrupter is of course quantum computing. If – and this is a big if – one of these powerful corporate or state actors pursuing the development of a quantum computer succeeds, artificial intelligence becomes a very real possibility.

You see, quantum computing leads to AI in a way that conventional computing does not. Unlike conventional computers, quantum computers are non-binary. Even the most advanced supercomputer sends and receives instructions as bits, a series of 0s and 1s passing through some kind of gate. A quantum computer, on the other hand, leverages instantaneous correlations across the system, while exploiting quantum bits (qubits) that can be 0 and 1 at the same time! The computational entities within a quantum computer are both entangled and in superposition. The resulting quantum device would be able to “demonstrate the resolution of a problem that, with current techniques on a supercomputer, would take longer than the age of the universe.” It follows that once we have a quantum computer, we’ll soon achieve AI, since we would possess a framework capable of resolving a problem as complex, dynamic, and interrelated as human consciousness.
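For readers who like to see the arithmetic, here is a minimal sketch of what “entangled and in superposition” means on paper. It is an ordinary classical simulation of two idealised qubits, not a quantum computer, and it says nothing about whether real hardware can hold such a state. The function names (`hadamard`, `tensor`, `cnot`, `probabilities`) are my own illustrative choices rather than any library’s API.

```python
import math

AMP = 1 / math.sqrt(2)  # amplitude of each branch in an equal superposition


def hadamard(qubit):
    """Put a single qubit [amp_0, amp_1] into superposition."""
    a, b = qubit
    return [AMP * (a + b), AMP * (a - b)]


def tensor(q1, q2):
    """Combine two single-qubit states into one two-qubit state.

    The result lists amplitudes in the order 00, 01, 10, 11.
    """
    return [x * y for x in q1 for y in q2]


def cnot(state):
    """Flip the second qubit whenever the first qubit is 1.

    In the 00, 01, 10, 11 ordering this swaps the last two amplitudes.
    """
    s00, s01, s10, s11 = state
    return [s00, s01, s11, s10]


def probabilities(state):
    """Chance of reading each outcome if the pair were measured."""
    return [abs(amp) ** 2 for amp in state]


zero = [1.0, 0.0]                        # a qubit that is definitely 0
bell = cnot(tensor(hadamard(zero), zero))  # superposition, then entanglement
probs = probabilities(bell)

for label, p in zip(["00", "01", "10", "11"], probs):
    print(label, round(p, 3))
```

In this idealised model the pair has an even chance of reading 00 or 11 and no chance of reading 01 or 10: each qubit is individually undecided, yet the two always agree. That correlation is the resource a quantum computer is meant to exploit.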

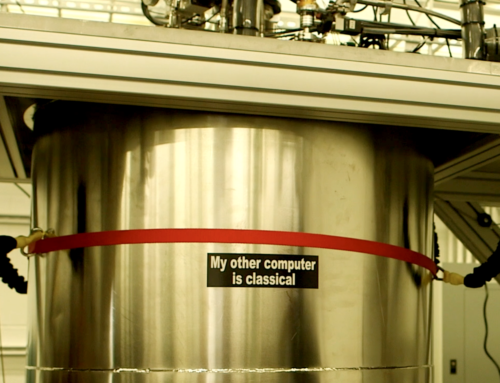

But there’s a snag. A pretty fundamental snag. In the end, we may never get a quantum computer…

This pessimism comes across when you read the latest report from Honeywell Quantum Solutions, a leading researcher in the field of quantum computing. Despite suggesting their proprietary architecture shows a “viable path toward large quantum computers,” Honeywell researchers simultaneously concede that a “host of difficulties… have precluded the architecture from being fully realized in the twenty years since its proposal.” There is a roadblock on the path to a quantum computer: while superposition and entanglement are features of the quantum mechanics that underpins the design of a quantum computer, it seems a separate (but equally relevant) feature of quantum theory has reared its ugly head.

The Honeywell researchers report (and don’t worry, this jargon will be explained):

“Quantum logic operations are executed in the gate zones using lasers propagating parallel to the trap surface. The beams are focused tightly enough to address a single zone but not tight enough to allow for individual addressing within a single ion crystal. This means that entangling operations between arbitrary pairs, single qubit operations, measurement, and reset all require crystal rearrangement.”

Put simply, this means, in the context of the quantum computer, you get one expression of the quantum phenomenon at one time (say, qubits in superposition), and another expression of the quantum phenomenon at another time (say, the entanglement of qubits), but you don’t get entangled qubits in superposition at the same time. Why? Because to realise the superposition of a qubit requires one very specific experimental arrangement, and to achieve the entanglement of qubits requires another. That is to say, the superposition and entanglement of qubits are physically paradoxical – they are mutually exclusive physical states of the same phenomenon.

All of the above is nothing more than a complicated way of arguing that the construction of a quantum computer is governed by the very same principle that determines every other quantum experiment: uncertainty. Heisenberg was right in 1927 and remains right in 2020. Uncertainty is the long shadow of quantum mechanics, and the truism remains: you can’t outrun your own shadow.

So far, Sir Roger Penrose has the upper hand. If the construction of a quantum computer is governed by relations of uncertainty and, therefore, its realisation is entangled in a paradox, Penrose may never be proved wrong. On the other hand, our capacity for quantum invention, limited though it might be, may nevertheless create a form of computation somewhere between the binary of conventional computing and the entangled superposition of quantum. This is my hunch. In the short term, I believe we’ll create a near-quantum system that delivers resolutions many, many times more powerful than a supercomputer. Not quite longer-than-the-age-of-the-universe-better, but significant nevertheless. Indeed, if reports out of China are reliable, a near-quantum computer of this kind has just been born and named Jiǔzhāng. Jiǔzhāng, a photonic quantum computer, performs operations that would take “China’s Sunway TaihuLight supercomputer 2.5 billion years to run.” Near-quantum systems such as this may then lead to a form of machine learning that doesn’t quite rise to the level of AI, but is intuitive, dynamic, and adaptive to an extent well beyond our current capacity. This kind of machine learning may never pass a Turing test, but it may achieve near-organic computation delivering forms of pattern-recognition, synthesis, prediction, invention, and risk anticipation unlike anything we can presently refer to.

In the final analysis, I suggest Penrose will be right to the extent that we never achieve the specific kind of AI he was referring to, while, simultaneously, Penrose will be wrong to the extent that we do produce a form of machine learning that, for all intents and purposes, performs like most people’s expectations of AI. It appears, like so much in life, the verdict will remain in superposition: Penrose will be right and wrong at the same time, with the final result determined by how you measure the question.
